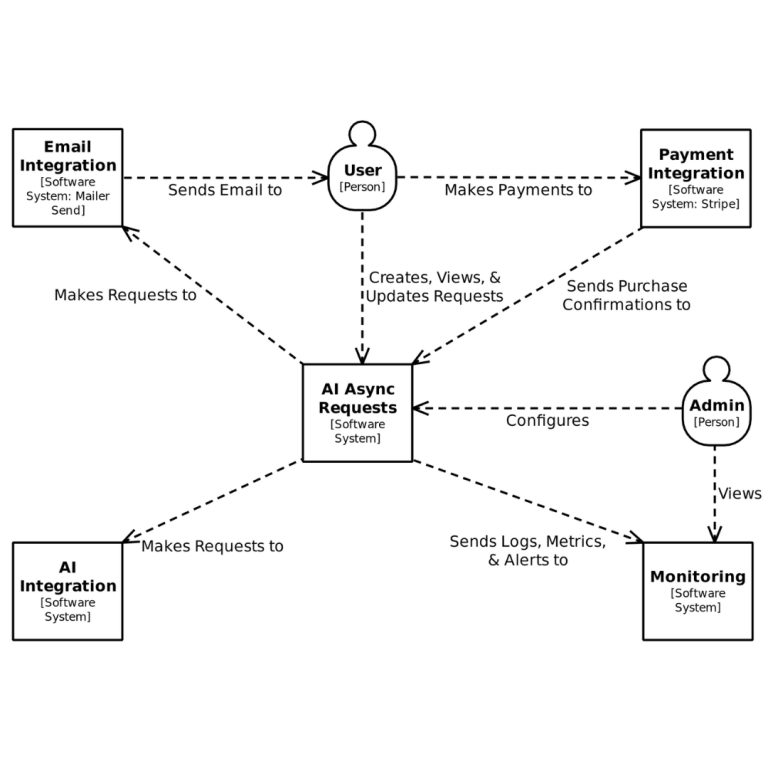

Application Components

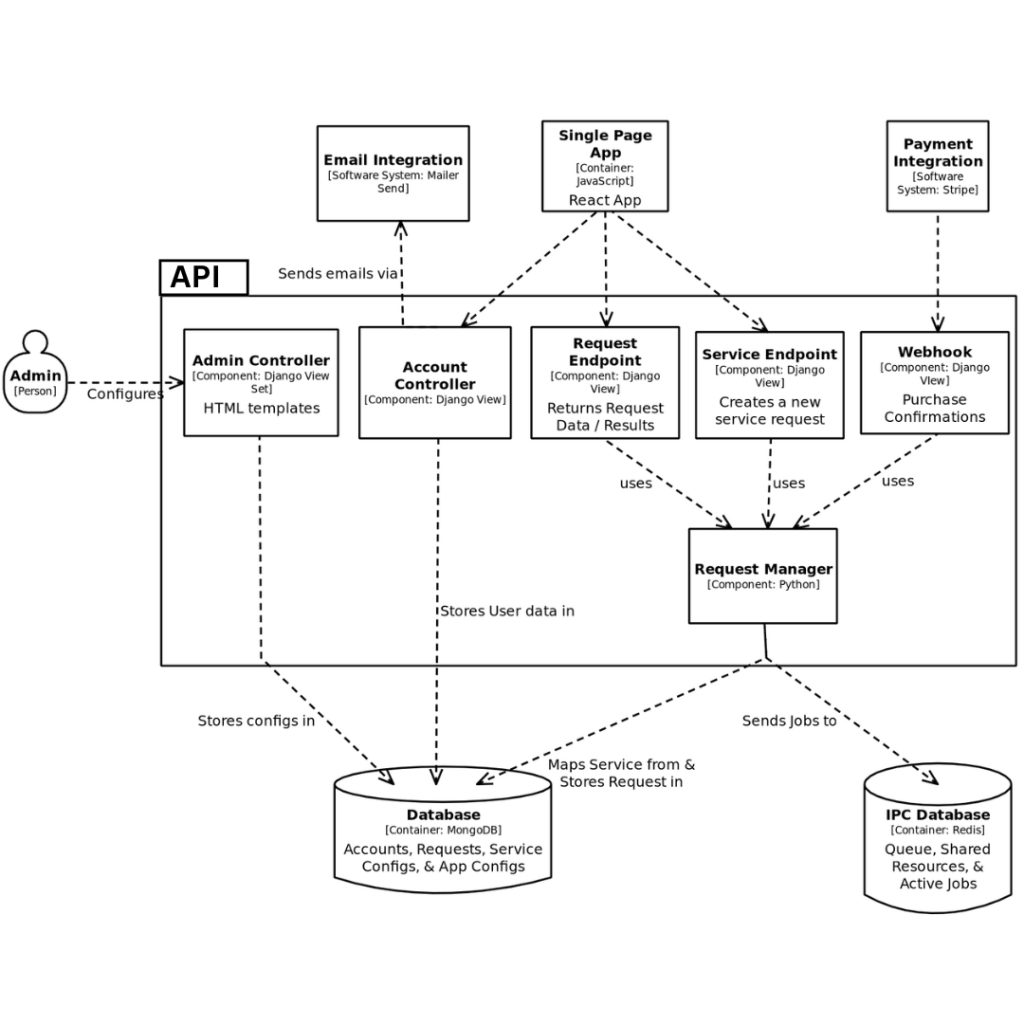

API (Django)

RESTful API service handling client requests and service configuration

User authentication and request management

Service configuration through admin interface

Request validation and queue management

Executor

Asynchronous handler for AI service integration

Task management and execution

Allocation and monitoring of shared resources like integration credits and maximum concurrent requests

Supports multiple AI service handlers
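
The resource allocation described above could be sketched with `asyncio` as follows. This is only an illustration under stated assumptions: `ResourcePool`, `fake_handler`, and the reserve-before-run credit policy are hypothetical and not taken from the Executor's actual code.

```python
import asyncio


class ResourcePool:
    """Hypothetical pool that caps concurrency and tracks a credit budget."""

    def __init__(self, credits: int, max_concurrent: int):
        self.credits = credits
        self._slots = asyncio.Semaphore(max_concurrent)
        self.active = 0  # handlers currently running
        self.peak = 0    # highest concurrency observed, for monitoring

    async def run(self, cost: int, handler, *args):
        if cost > self.credits:
            raise RuntimeError("insufficient integration credits")
        # Reserve credits before awaiting so concurrent tasks see the new balance.
        self.credits -= cost
        async with self._slots:
            self.active += 1
            self.peak = max(self.peak, self.active)
            try:
                return await handler(*args)
            finally:
                self.active -= 1


async def fake_handler(n: int) -> int:
    # Stand-in for a call to an AI service.
    await asyncio.sleep(0.01)
    return n * 2


async def demo():
    # The pool is created inside the running event loop.
    pool = ResourcePool(credits=5, max_concurrent=2)
    results = await asyncio.gather(*(pool.run(1, fake_handler, n) for n in range(4)))
    return results, pool.credits, pool.peak
```

Whether credits are refunded when a handler fails is a policy decision this sketch leaves out.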

Recon

Request monitoring system

Ensures request completion

Resource usage tracking

System health monitoring

System Design

Service Handlers

```
id: <module>.<method>  # Unique handler identifier
credits: <integer>     # Internal API usage cost
```
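
One way a `<module>.<method>` identifier could be turned into a callable is a dynamic import. The sketch below assumes the part before the last dot is an importable module path; `resolve_handler` is a hypothetical helper name, and standard-library functions stand in for real handlers.

```python
import importlib
from typing import Callable


def resolve_handler(handler_id: str) -> Callable:
    """Resolve a '<module>.<method>' identifier to a callable."""
    module_path, _, method_name = handler_id.rpartition(".")
    if not module_path or not method_name:
        raise ValueError(f"expected '<module>.<method>', got {handler_id!r}")
    module = importlib.import_module(module_path)
    handler = getattr(module, method_name)  # AttributeError if the method is missing
    if not callable(handler):
        raise TypeError(f"{handler_id!r} does not refer to a callable")
    return handler


# A standard-library function stands in for a real service handler here.
sqrt = resolve_handler("math.sqrt")
```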

Service Offers

```
price: integer            # Platform fee for the action
prompt_template: string   # Template string with {variables}
handler: ServiceHandler   # Associated handler entry
create_qty: integer       # Number of results to generate
expiration: integer       # Optional: Result expiration time in seconds
params: object            # API integration configuration parameters
```

Each offer is exposed at /svc/<service>/<action>
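
A `prompt_template` with `{variables}` could be populated from the request payload roughly as follows. This sketch assumes Python `str.format` placeholder syntax and only allows plain identifiers as placeholders; `template_fields` and `render_prompt` are hypothetical names, and the template text is invented for the example.

```python
from string import Formatter


def template_fields(template: str) -> set:
    """Return the placeholder names used in the template."""
    fields = set()
    for _, name, _, _ in Formatter().parse(template):
        if name is None:
            continue  # literal text with no placeholder
        if not name.isidentifier():
            # Reject things like {user.attr} or {0} to keep templates simple and safe.
            raise ValueError(f"unsupported placeholder: {name!r}")
        fields.add(name)
    return fields


def render_prompt(template: str, payload: dict) -> str:
    """Fill the template from the payload, ignoring extra payload keys."""
    fields = template_fields(template)
    missing = fields - set(payload)
    if missing:
        raise ValueError(f"missing payload values: {sorted(missing)}")
    return template.format(**{name: payload[name] for name in fields})


prompt = render_prompt(
    "Write a {tone} poem about {topic}",
    {"tone": "short", "topic": "rain", "unused": 1},
)
```

Validating the payload before formatting lets the API reject a request with a clear error instead of failing later in the Executor.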

Service Deals

```
# Included offers
offers: [Service Offers]  # Required service offers
price: integer            # Fixed price for the deal
price_modifier: integer   # Percentage discount on cumulative offer prices

# Optional add-ons
addons: [Service Offers]  # Optional service offers
addons_modifier: integer  # Percentage discount on selected add-ons
```

- Primary offers: charged either the fixed price or the cumulative offer price reduced by price_modifier percent

- Add-ons: charged the cumulative price of the selected add-ons reduced by addons_modifier percent
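
The pricing rules above can be worked through in a small sketch. It assumes that a fixed `price` takes precedence over `price_modifier` when both are set, and that discounts round down to whole units; neither detail is stated in the design. The `Offer` and `Deal` classes are simplified stand-ins for the real models.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Offer:
    name: str
    price: int


@dataclass
class Deal:
    offers: List[Offer]
    price: Optional[int] = None  # fixed price, if any
    price_modifier: int = 0      # percent off the summed offer prices
    addons: List[Offer] = field(default_factory=list)
    addons_modifier: int = 0     # percent off the selected add-ons


def discounted(total: int, percent: int) -> int:
    # Integer arithmetic; rounding down is an assumption.
    return total * (100 - percent) // 100


def deal_total(deal: Deal, selected_addons: List[str]) -> int:
    if deal.price is not None:
        base = deal.price
    else:
        base = discounted(sum(o.price for o in deal.offers), deal.price_modifier)
    allowed = {a.name for a in deal.addons}
    unknown = [name for name in selected_addons if name not in allowed]
    if unknown:
        raise ValueError(f"add-ons not part of this deal: {unknown}")
    addon_sum = sum(a.price for a in deal.addons if a.name in selected_addons)
    return base + discounted(addon_sum, deal.addons_modifier)


bundle = Deal(
    offers=[Offer("draft", 100), Offer("summary", 50)],
    price_modifier=10,
    addons=[Offer("translate", 40), Offer("audio", 20)],
    addons_modifier=25,
)
total = deal_total(bundle, ["translate"])  # 135 for the offers + 30 for the add-on
```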

Pipelines:

Request Workflow

The user sends a POST request to

/svc/<service>/<action>/

- The API translates the service and action to a handler, method, and prompt template.

- Payload values submitted in the POST request populate the template.

- A request ID is generated from a hash of the user and inputs, which prevents spam requests and accidental duplicate submissions.

- The request object is stored in the Mongo request collection.

- A job message is built and sent to the queue.
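
The request ID step could look like the sketch below. It assumes the "inputs" include the service, the action, and the payload, and that SHA-256 over canonical JSON is an acceptable hash; the design only says "hash of user and inputs". A plain dict stands in for the Mongo request collection and the queue, and all names are hypothetical.

```python
import hashlib
import json


def request_id(user_id: str, service: str, action: str, payload: dict) -> str:
    """Derive a deterministic ID so identical submissions collide."""
    canonical = json.dumps(
        {"user": user_id, "service": service, "action": action, "payload": payload},
        sort_keys=True,            # key order in the payload must not change the ID
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def submit(seen: dict, user_id: str, service: str, action: str, payload: dict):
    """Return (request_id, accepted); duplicates are not stored or queued again."""
    rid = request_id(user_id, service, action, payload)
    if rid in seen:
        return rid, False
    # In the real system this is where the request would be stored and the job queued.
    seen[rid] = {"request_id": rid, "service": service, "action": action, "payload": payload}
    return rid, True


seen = {}
first = submit(seen, "user-1", "poem", "create", {"topic": "rain", "tone": "short"})
second = submit(seen, "user-1", "poem", "create", {"tone": "short", "topic": "rain"})
```

Because the ID depends only on the user and inputs, a repeated submission maps to the existing request instead of creating a second job.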

Infrastructure

Platform Administration

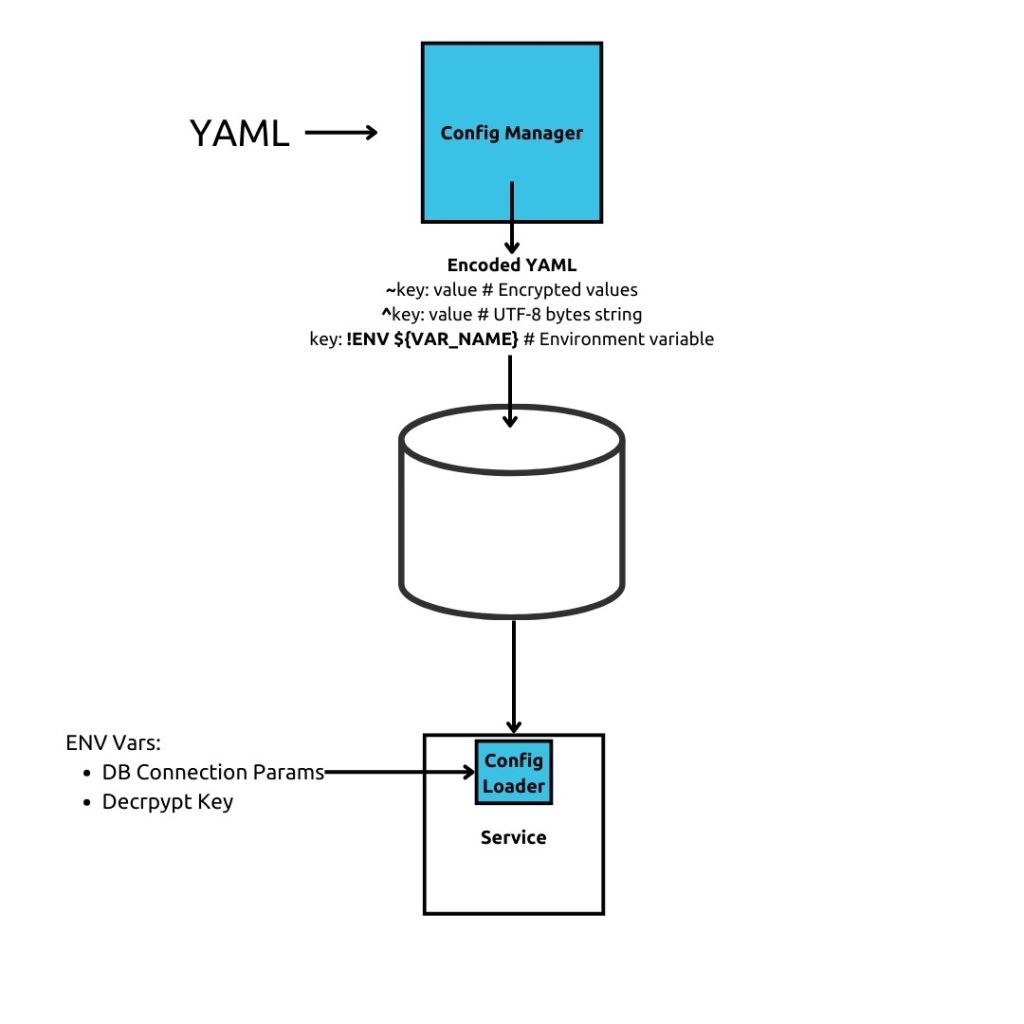

Configuration

# Special Notations

~key: value # Encrypted values

^key: value # UTF-8 bytes string

key: !ENV ${VAR_NAME} # Environment variable

version: 1.0

meta:

source: mongo

collection: !ENV ${CFG_COLLECTION}

ids: # config, version

api: 1.0

service_map: 1.0

mongo:

uri: !ENV ${MONGO_URI}

port: !ENV ${MONGO_PORT}

db: !ENV ${MONGO_DB}

# Key to decrypt configs

fernet_key: !ENV ${decrypt_key}
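
To illustrate how the special notations might be resolved after the file is parsed, the sketch below walks a nested dict. It makes several assumptions: `!ENV ${VAR}` values arrive as plain strings rather than through a YAML tag constructor, the nesting of the sample data is a guess, and `decrypt` is a caller-supplied function (presumably Fernet-based, given `fernet_key`). The reversing lambda is only a placeholder, and `resolve_config` is a hypothetical name.

```python
import re
from typing import Any, Callable, Mapping

# Matches the documented "!ENV ${VAR_NAME}" notation.
ENV_PATTERN = re.compile(r"^!ENV\s+\$\{(\w+)\}$")


def resolve_config(node: Any, env: Mapping[str, str], decrypt: Callable[[str], str]) -> Any:
    """Recursively resolve ~key, ^key, and !ENV notations."""
    if isinstance(node, dict):
        resolved = {}
        for key, value in node.items():
            value = resolve_config(value, env, decrypt)
            if isinstance(key, str) and key.startswith("~"):
                resolved[key[1:]] = decrypt(value)          # encrypted value
            elif isinstance(key, str) and key.startswith("^"):
                resolved[key[1:]] = value.encode("utf-8")   # UTF-8 bytes string
            else:
                resolved[key] = value
        return resolved
    if isinstance(node, list):
        return [resolve_config(item, env, decrypt) for item in node]
    if isinstance(node, str):
        match = ENV_PATTERN.match(node.strip())
        if match:
            return env[match.group(1)]  # KeyError if the variable is not set
    return node


raw = {
    "mongo": {"uri": "!ENV ${MONGO_URI}", "db": "!ENV ${MONGO_DB}"},
    "~api_token": "nekot",  # hypothetical encrypted value
    "^salt": "pepper",      # hypothetical bytes value
}
resolved = resolve_config(
    raw,
    env={"MONGO_URI": "mongodb://localhost", "MONGO_DB": "svc"},
    decrypt=lambda text: text[::-1],  # placeholder, not real decryption
)
```

Passing `env` explicitly (for example `os.environ`) keeps the resolver easy to test without touching the process environment.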

Logging and Monitoring

Logging and monitoring use the EFK stack (Elasticsearch, Fluentd, Kibana) for centralized log management. A Prometheus exporter is configured for the Django API, and custom Prometheus metrics in the Executor track total requests processed and job processing time. The logging context includes the request ID so that logs can be correlated across services. Grafana is used to visualize system health and performance.
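
Carrying a request ID in the logging context can be done with the standard library alone. The sketch below is one possible approach, not necessarily the project's: it assumes a `contextvars` variable set once per request or job and a `logging.Filter` that copies it onto each record. The logger name and log format are hypothetical.

```python
import contextvars
import io
import logging

# Holds the ID of the request currently being processed; "-" when none is set.
request_id_var = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Attach the current request ID to every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id_var.get()
        return True


def build_logger(name: str, stream=None) -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(
        logging.Formatter("%(levelname)s request_id=%(request_id)s %(message)s")
    )
    handler.addFilter(RequestIdFilter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


buffer = io.StringIO()  # stands in for the real log shipper
logger = build_logger("executor.demo", buffer)

token = request_id_var.set("abc123")  # set when a job is picked up
logger.info("job started")
request_id_var.reset(token)           # clear once the job is done
```

A context variable keeps the ID isolated per asyncio task, which fits an asynchronous Executor better than a module-level global.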

Adding New Service Handlers

Future enhancements

- WebSocket connection for request processing status updates

- Kafka topics and handler-specific Executors